Environment

In this setup, the UDOO will be both the master and a worker, and a Raspberry Pi will run a second worker. A user account "spark" has been created on both machines, and each machine has Apache Spark placed under that user's home directory (e.g. /home/spark/spark-1.4.1-bin-hadoop2.6).

For reference, my UDOO is running Arch Linux (kernel 4.1.6) with Oracle JDK 1.8.0_60, Scala 2.10.5, and Python 2.7.10. Its hostname is "maggie".

The Raspberry Pi (512MB Model B) is running Raspbian (kernel 4.1.6) with Oracle JDK 1.8.0, Scala 2.10.5, and Python 2.7.3. Its hostname is "spark01". (Note that you may want to modify the line in /etc/hosts that maps 127.0.0.1 to the hostname so that it points to the IP address of eth0 instead. Otherwise Spark may bind to the wrong address.)
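If you would rather script the /etc/hosts change, the shell function below is one way to do it. It is a sketch under assumptions: the name fix_hosts_entry and the example address 192.168.1.11 for spark01's eth0 are hypothetical. It relies on GNU sed, as shipped with Raspbian. It matches both 127.0.0.1 and the 127.0.1.1 entry that Debian-based systems such as Raspbian usually use for the hostname.

```shell
# fix_hosts_entry FILE HOST IP  (hypothetical helper)
# Rewrites a "127.0.0.1 HOST" or "127.0.1.1 HOST" line in FILE so that HOST
# maps to IP instead. Other lines (e.g. "127.0.0.1 localhost") are untouched.
# Requires GNU sed for -i, \+ and \| in basic regular expressions.
fix_hosts_entry() {
    hosts_file=$1
    host=$2
    ip=$3
    cp "$hosts_file" "$hosts_file.bak" || return 1   # keep a backup copy
    sed -i "s/^127\.0\.[01]\.1\([[:space:]]\+\)$host\([[:space:]]\|\$\)/$ip\1$host\2/" "$hosts_file"
}
```

For example, as root on the Pi: fix_hosts_entry /etc/hosts spark01 192.168.1.11. Then check the file; the previous version is left in /etc/hosts.bak.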

Setup

First we need to enable passwordless ssh login with a key pair, because the Spark master will need to log in to the slaves. On the UDOO (the master), log in as spark and run the following command to generate the keys:

$ ssh-keygen -t rsa -b 4096

Use an empty passphrase (just press Enter) when prompted to encrypt the private key. Then copy the public key as "authorized_keys" on both the UDOO (because the UDOO will also run as one of the slaves) and the Raspberry Pi:

$ cp ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys
$ chmod go-rwx ~/.ssh/authorized_keys
$ ssh-copy-id spark@spark01

Enter the spark user's password when copying the key to spark01 (the Raspberry Pi). After that, ssh to spark01 again. No password should be needed to log in.
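A small check like the one below can confirm that key-based login works for every worker before you continue. It is a sketch, and check_passwordless_ssh is a hypothetical name. BatchMode=yes and ConnectTimeout are standard OpenSSH client options. With BatchMode enabled, ssh fails instead of prompting for a password. It also fails for a host whose key has not been accepted yet, so ssh to each host interactively once first.

```shell
# check_passwordless_ssh HOST...  (hypothetical helper)
# Tries a no-op command on each host as user "spark" without allowing a
# password prompt; returns non-zero if any host still needs a password.
check_passwordless_ssh() {
    status=0
    for host in "$@"; do
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "spark@$host" true; then
            echo "$host: ok"
        else
            echo "$host: key login failed (password still required, or host unreachable)"
            status=1
        fi
    done
    return $status
}
```

For example: check_passwordless_ssh spark01 localhost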

Once ssh is configured, we can set up Spark itself.

On the master, create a file called slaves under Spark's conf folder that lists the Spark worker machines, one per line:

spark01
localhost

Note that the second line is "localhost", which will start a worker locally on UDOO.
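If you prefer to create the file from the command line, a here-document does it in one step. This sketch assumes you run it from the Spark directory on the master (e.g. ~/spark-1.4.1-bin-hadoop2.6). The mkdir -p is harmless when conf already exists.

```shell
# Run from the Spark directory on the master (UDOO).
mkdir -p conf   # no-op when conf/ already exists
# One worker hostname per line: the Pi, then a local worker on the UDOO.
cat > conf/slaves <<'EOF'
spark01
localhost
EOF
```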

Also on the master machine, copy conf/spark-env.sh.template to conf/spark-env.sh, then uncomment and edit the following settings:

SPARK_MASTER_IP=192.168.1.10
SPARK_WORKER_CORES=2
SPARK_WORKER_MEMORY=256m
SPARK_EXECUTOR_MEMORY=256m
SPARK_DRIVER_MEMORY=256m

SPARK_MASTER_IP is the address of my UDOO. The worker core count is set to 2 because the master also runs on the UDOO, so it is better not to let the worker use all 4 cores, which it would do by default. Worker memory is set to 256MB. For other options, refer to the Spark documentation.
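conf/spark-env.sh is an ordinary shell script that Spark's launch scripts source. Because of that, the core count could instead be worked out on each machine, which lets you copy a single file to every node unchanged. The sketch below is a hypothetical variant, not the configuration used above. It assumes GNU coreutils' nproc and a "use half the cores, at least 1" rule. On the quad-core UDOO that rule gives 2, and on the single-core Pi B it gives 1.

```shell
# Hypothetical variant of conf/spark-env.sh: same settings as above, but the
# worker core count is computed per machine instead of hard-coded.
SPARK_MASTER_IP=192.168.1.10

total_cores=$(nproc)            # number of available processing units
if [ "$total_cores" -gt 1 ]; then
    # Leave half the cores free (assumption: room for the master on the UDOO).
    SPARK_WORKER_CORES=$((total_cores / 2))
else
    SPARK_WORKER_CORES=1        # single-core boards such as the Pi B
fi

SPARK_WORKER_MEMORY=256m
SPARK_EXECUTOR_MEMORY=256m
SPARK_DRIVER_MEMORY=256m
```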

Create the same conf/spark-env.sh on the Raspberry Pi. My Raspberry Pi B has only 1 core, so I changed SPARK_WORKER_CORES to 1. If you have multiple slaves to deploy to, you can copy the file with scp, which also tests the passwordless ssh login:

$ scp ~/spark-1.4.1-bin-hadoop2.6/conf/spark-env.sh spark@spark01:spark-1.4.1-bin-hadoop2.6/conf/
$ scp ~/spark-1.4.1-bin-hadoop2.6/conf/spark-env.sh spark@spark02:spark-1.4.1-bin-hadoop2.6/conf/
$ scp ~/spark-1.4.1-bin-hadoop2.6/conf/spark-env.sh spark@spark03:spark-1.4.1-bin-hadoop2.6/conf/
...
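With more than a couple of slaves, conf/slaves itself can drive the copy. In this sketch, push_spark_env is a hypothetical helper. It assumes every host uses the same Spark directory name as above. It skips the localhost entry, since the master already has the file, and it ignores blank lines and # comments.

```shell
# push_spark_env [SLAVES_FILE]  (hypothetical helper)
# Copies conf/spark-env.sh from the master to every remote host in SLAVES_FILE.
SPARK_DIR=spark-1.4.1-bin-hadoop2.6

push_spark_env() {
    slaves_file=${1:-$HOME/$SPARK_DIR/conf/slaves}
    # Strip comments and blank lines, then loop over the remaining hostnames.
    for host in $(sed -e 's/#.*$//' -e '/^[[:space:]]*$/d' "$slaves_file"); do
        [ "$host" = "localhost" ] && continue   # the master already has the file
        scp "$HOME/$SPARK_DIR/conf/spark-env.sh" "spark@$host:$SPARK_DIR/conf/" || return 1
    done
}
```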

The cluster should be ready by now. Start the master and the slaves by running the following command on the master, from the Spark directory:

$ sbin/start-all.sh

You can also start the master and individual slaves separately. Refer to the Spark documentation for the other commands.

Check the console output and the logs folder for any error messages. Strangely, on my setup the console shows error messages like the following, although both the master and the slaves are in fact running fine:

......
failed to launch org.apache.spark.deploy.master.Master
full log in /home/spark/spark-1.4.1-bin-hadoop2.6/sbin/../logs/spark-spark-org.apache.spark.deploy.master.Master-1-maggie.out
......
spark01: failed to launch org.apache.spark.deploy.worker.Worker:
spark01: full log in /home/spark/spark-1.4.1-bin-hadoop2.6/sbin/../logs/spark-spark-org.apache.spark.deploy.worker.Worker-1-spark01.out
......

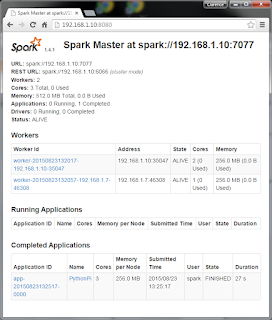
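To tell real failures apart from these misleading console messages, you can search the daemon logs directly. This is a sketch: find_log_errors is a hypothetical helper, and the ERROR/Exception patterns are assumptions. Each worker's log is written on the machine that runs it, so run the check on the Raspberry Pi as well as on the UDOO.

```shell
# find_log_errors [LOG_DIR]  (hypothetical helper)
# Prints the names of .out log files that contain "ERROR" or "Exception".
# Exit status is 0 only if at least one file matched.
find_log_errors() {
    log_dir=${1:-logs}
    grep -l -E 'ERROR|Exception' "$log_dir"/*.out 2>/dev/null
}
```

For example: find_log_errors ~/spark-1.4.1-bin-hadoop2.6/logs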

To make sure everything is working, point your browser to the cluster web UI on the master node, e.g. http://192.168.1.10:8080/

You should see two workers connected to the cluster. (Wait a minute or two; the worker on the Raspberry Pi takes some time to start up.)

We can then submit a job to the cluster, e.g.

$ bin/spark-submit --master spark://192.168.1.10:7077 --executor-memory=256m examples/src/main/python/pi.py 10

(Note the use of --executor-memory. Because I configured my slaves with only 256MB of worker memory, I also need to limit the application's executor memory when submitting a job. Otherwise, no worker will have enough memory to pick up the job.)
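The rule behind this is that the standalone master only places an executor on a worker with at least the requested --executor-memory free. You can express that as a quick pre-flight check. The helpers below are hypothetical and only understand the m and g suffixes. They compare against a single worker's SPARK_WORKER_MEMORY and ignore anything else already running on that worker.

```shell
# to_mb SIZE  (hypothetical helper): convert "256m" or "1g" to megabytes.
to_mb() {
    case "$1" in
        *[gG]) echo $(( ${1%?} * 1024 )) ;;   # strip the suffix, scale to MB
        *[mM]) echo "${1%?}" ;;               # already in MB, strip the suffix
        *) echo "unsupported size: $1" >&2; return 1 ;;
    esac
}

# executor_fits EXECUTOR_MEM WORKER_MEM  (hypothetical helper)
# Succeeds if one executor of EXECUTOR_MEM fits in a worker with WORKER_MEM.
executor_fits() {
    exec_mb=$(to_mb "$1") || return 1
    worker_mb=$(to_mb "$2") || return 1
    [ "$exec_mb" -le "$worker_mb" ]
}
```

For example, executor_fits 256m 256m succeeds, while executor_fits 512m 256m fails.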

Refresh the web UI to monitor the progress.

On my setup, the UDOO's worker usually picks up applications much faster than the Raspberry Pi, so with a simple test like this, no job will run on the Raspberry Pi at all. If necessary, remove the UDOO (the localhost line) from conf/slaves to test only the Raspberry Pi.
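Here is one way to do that, sketched under the assumption that you run it from the Spark directory on the master. Stop the cluster first, while localhost is still listed, so that sbin/stop-all.sh also stops the UDOO worker. Then remove the line and start the cluster again. only_remote_workers is a hypothetical helper that keeps a backup in conf/slaves.bak.

```shell
# only_remote_workers [SLAVES_FILE]  (hypothetical helper)
# Removes the "localhost" entry from the slaves file, keeping a .bak copy,
# so that start-all.sh only launches workers on the remote hosts.
only_remote_workers() {
    slaves_file=${1:-conf/slaves}
    cp "$slaves_file" "$slaves_file.bak" || return 1
    sed -i '/^[[:space:]]*localhost[[:space:]]*$/d' "$slaves_file"
}
```

A typical sequence is sbin/stop-all.sh, then only_remote_workers, then sbin/start-all.sh. Restore the original list with cp conf/slaves.bak conf/slaves.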